ACFR Agricultural Ground Vehicle Obstacle Dataset

The dataset - acfr-obstacles - contains calibrated and annotated images, point clouds, and navigation data, collected at different farms across Australia in 2013.

Download the dataset from one of the mirrors:

The dataset was gathered by the agriculture team at the Australian Centre for Field Robotics, The University of Sydney, Australia. Further information about the group can be found at sydney.edu.au/acfr/agriculture

The obstacle dataset was collected using Shrimp - an experimental research platform designed and built at the ACFR.

The robot had a localization system consisting of a Novatel SPAN OEM3 RTK-GPS/INS with a Honeywell HG1700 IMU, providing accurate 6-DOF position and orientation estimates.

A Point Grey Ladybug 3 panospheric camera system with 6 cameras and a Velodyne HDL-64E lidar each covered a 360-degree horizontal field of view around the vehicle, recording images and point clouds with synchronised timestamps.
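
Because both sensors are timestamped on a common clock, a typical first step is pairing each camera frame with the lidar scan closest in time. The following is a minimal sketch, assuming the timestamps have already been read into sorted lists of integer microseconds. The function name `nearest_scan` and the 50 ms tolerance are hypothetical illustrations, not part of the dataset tools.

```python
from bisect import bisect_left
from typing import List, Optional


def nearest_scan(scan_times: List[int], t: int, max_gap: int = 50_000) -> Optional[int]:
    """Return the index of the scan whose timestamp is closest to t.

    scan_times must be sorted ascending; all values are assumed to be in
    microseconds. Returns None if the closest scan is more than max_gap
    away (50 ms by default, an arbitrary illustrative threshold).
    """
    if not scan_times:
        return None
    i = bisect_left(scan_times, t)
    # The closest scan is one of the neighbours around the insertion point.
    candidates = [j for j in (i - 1, i) if 0 <= j < len(scan_times)]
    best = min(candidates, key=lambda j: abs(scan_times[j] - t))
    return best if abs(scan_times[best] - t) <= max_gap else None


# Example with illustrative timestamps (not taken from the dataset).
scans = [1_000_000, 1_100_000, 1_200_000]
print(nearest_scan(scans, 1_120_000))  # 1
```

Binary search keeps each lookup logarithmic in the number of scans, which matters when matching every image of a long run.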

| Environment | Season | Length | Annotated frames | Obstacles |

|---|---|---|---|---|

| Mango orchard | Summer | 408 m (359 s) | 36 | Buildings, trailer, cars, tractor, boxes, humans, vegetation |

| Lychee orchard | Summer | 122 m (121 s) | 15 | Buildings, trailers, cars, humans, iron bars, vegetation |

| Custard apple orchard | Summer | 159 m (128 s) | 23 | Trailer, car, humans, poles, vegetation |

| Almond orchard | Spring | 258 m (212 s) | 31 | Buildings, cars, humans, dirt pile, plate, vegetation |

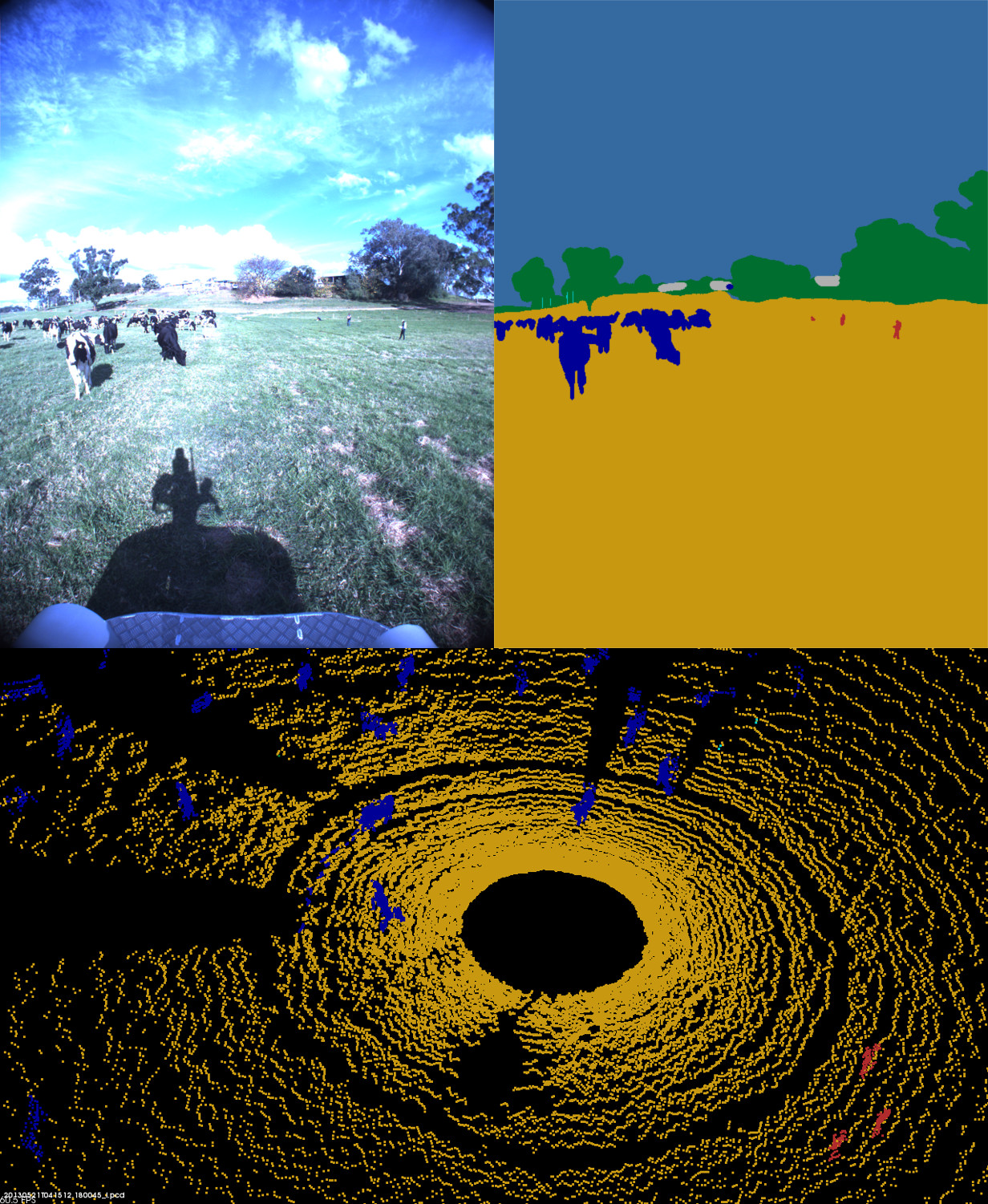

| Dairy field | Winter | 91 m (106 s) | 15 | Humans, hills, poles, cows, vegetation |

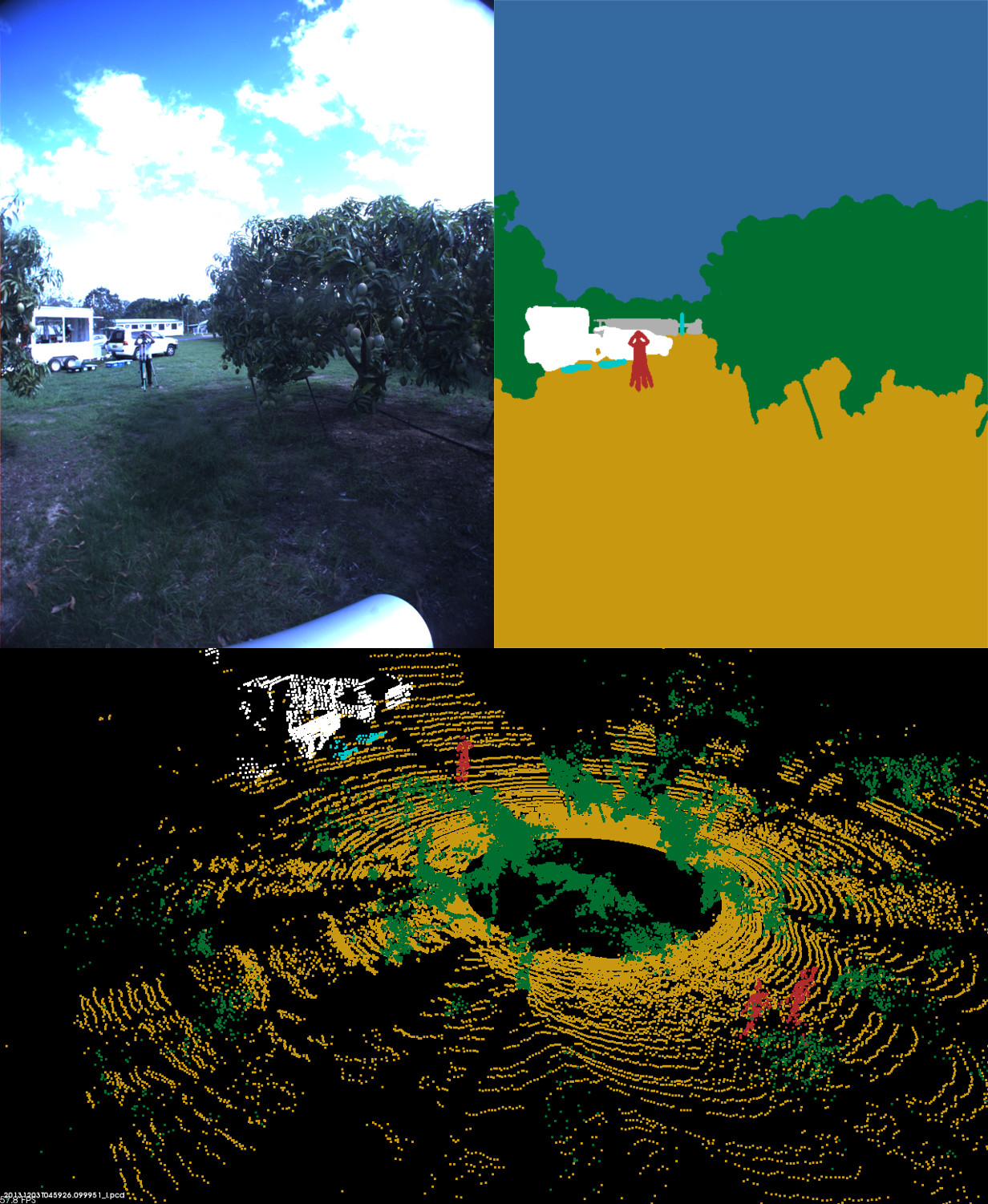

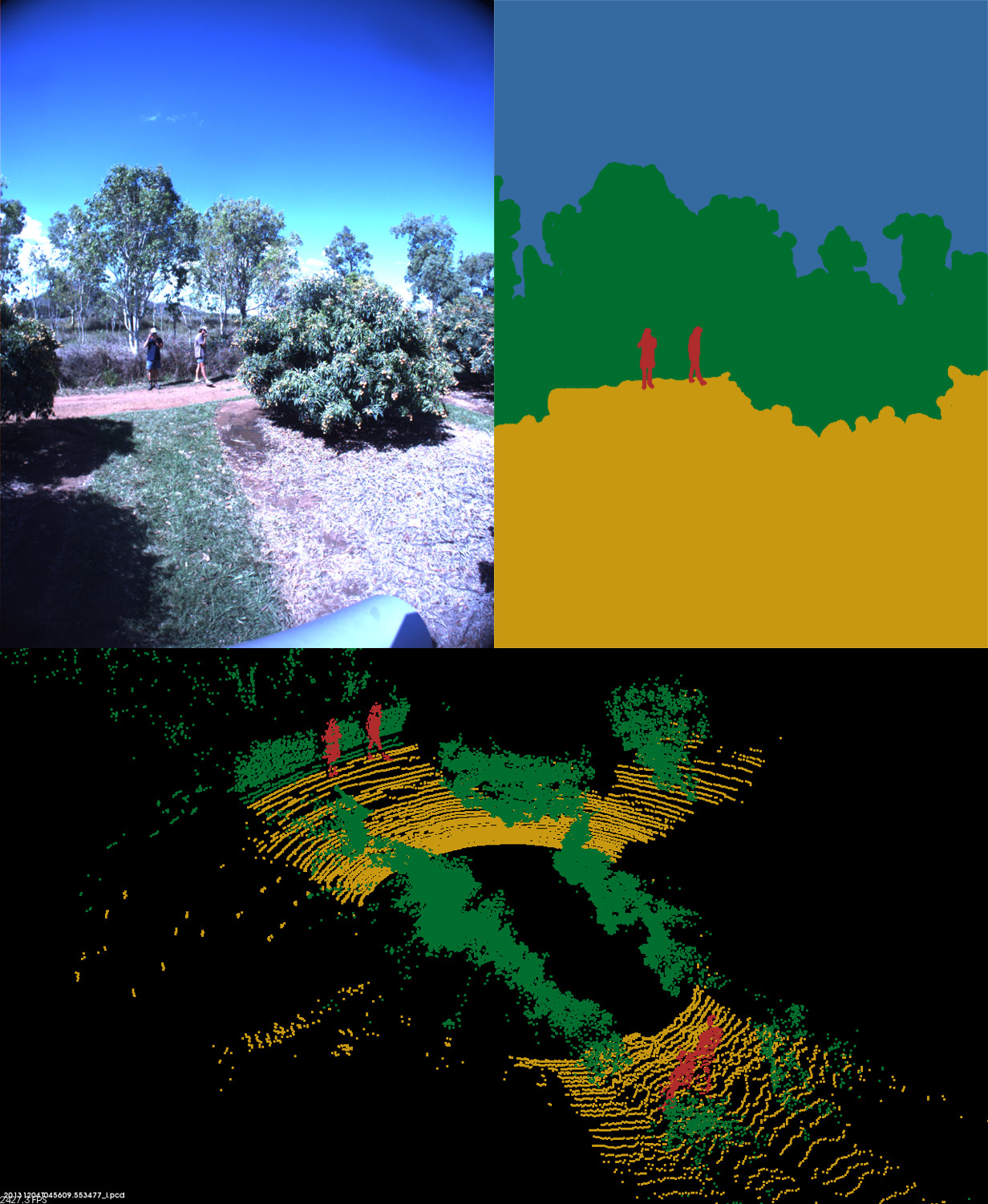

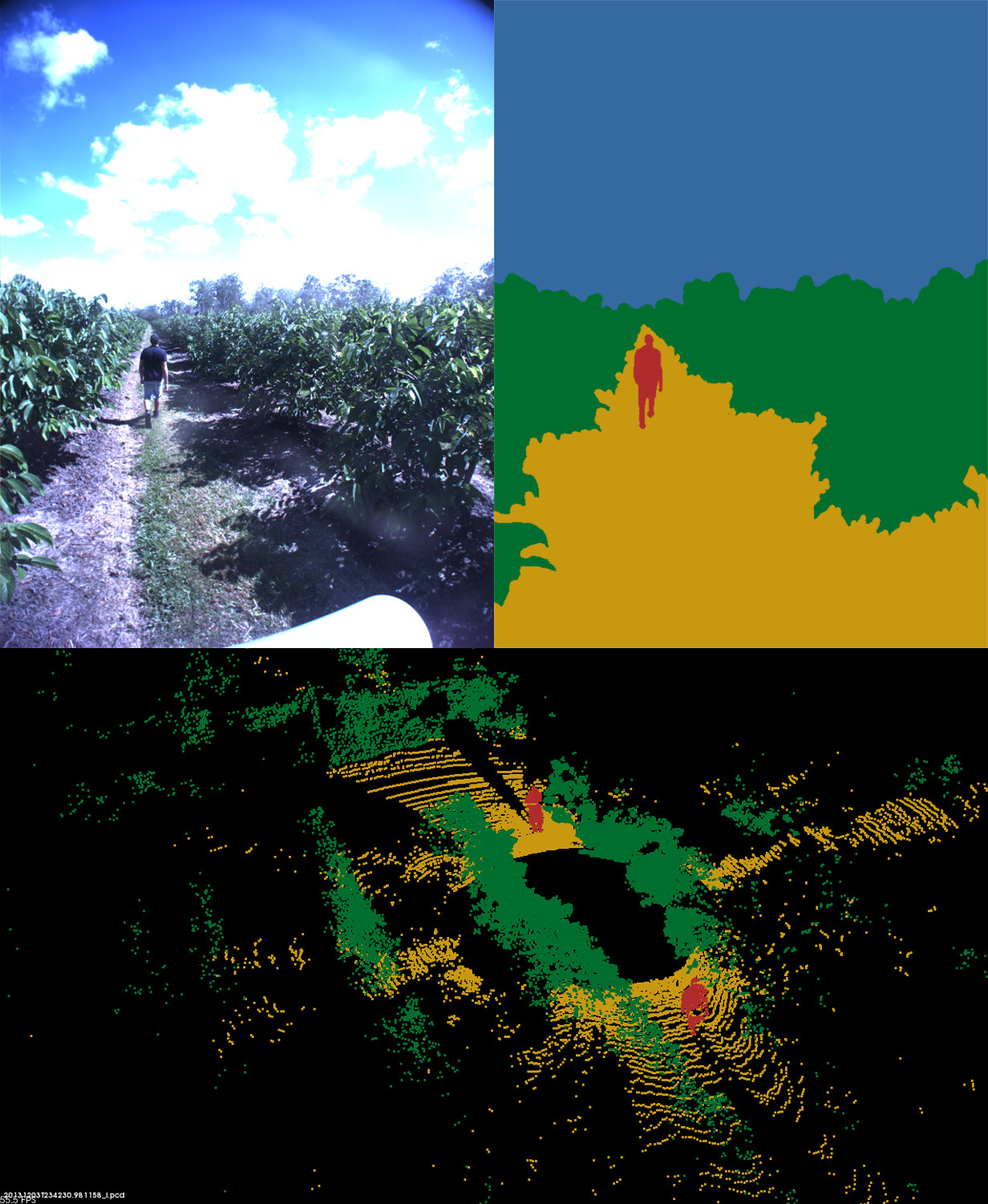

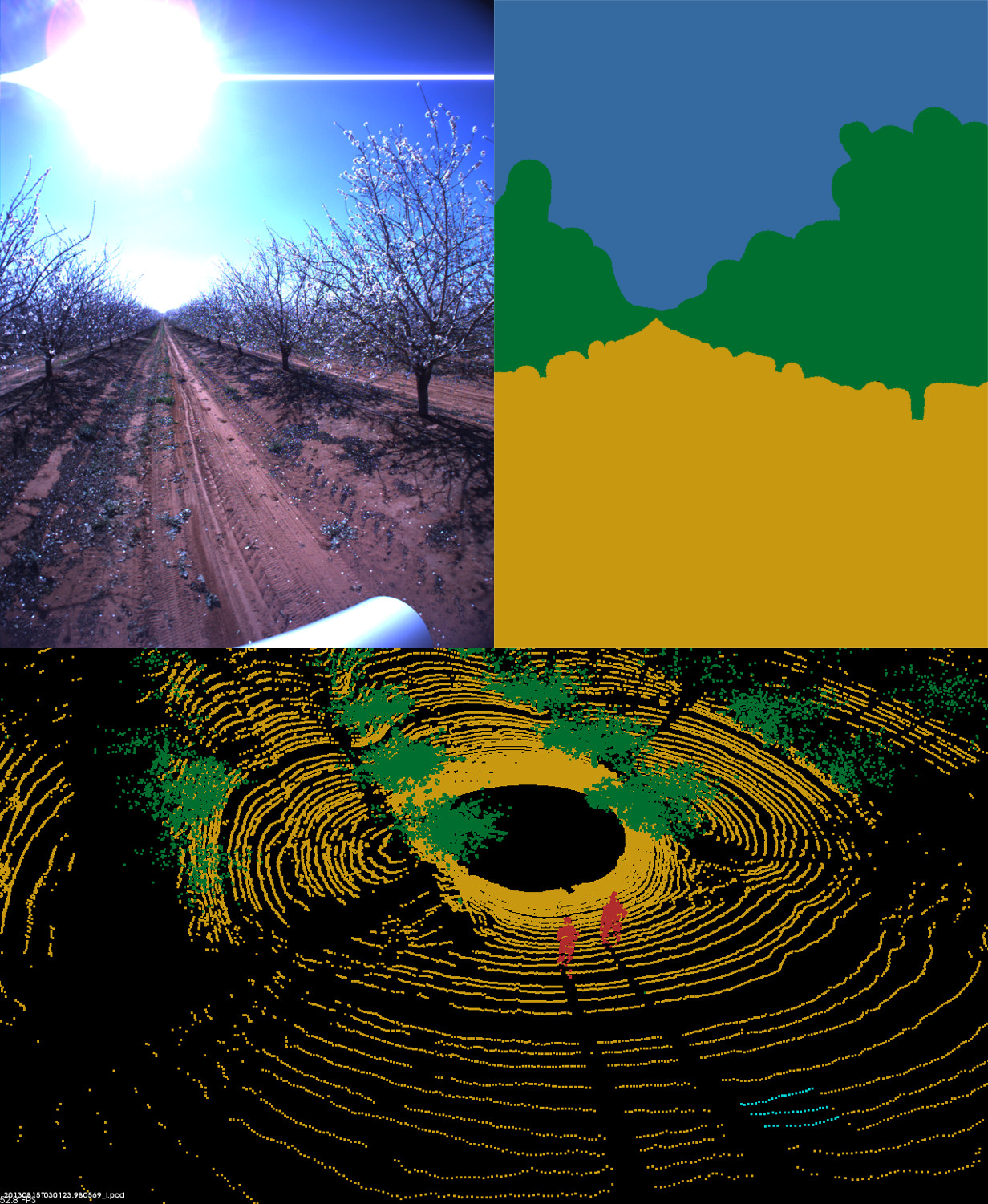

The figures below illustrate example annotations of images and point clouds from each of the 5 environments.

|

|

|

|

|

| Mangoes | Lychees | Custard Apples | Almonds | Dairy |

    acfr-obstacle-dataset
    ├── almonds
    │   ├── 2013-08-15
    │   │   ├── annotations
    │   │   │   ├── ladybug
    │   │   │   └── velodyne
    │   │   │       ├── bin
    │   │   │       └── pcd
    │   │   └── data
    │   │       ├── ladybug
    │   │       ├── novatel
    │   │       └── velodyne
    │   └── 2013-08-16
    │       ├── ...
    ├── custard-apples
    │   └── 2013-12-04
    │       ├── ...
    ├── dairy
    │   ├── 2013-05-21_1
    │   │   ├── ...
    │   └── 2013-05-21_2
    │       ├── ...
    ├── lychees
    │   └── 2013-12-04
    │       ├── ...
    ├── mangoes
    │   └── 2013-12-03
    │       ├── ...
    ├── config
    └── readme.txt

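Each environment folder contains one or more subfolders named after the date of data collection; a numeric suffix distinguishes sessions recorded on the same day (e.g. 2013-05-21_1 and 2013-05-21_2 for the dairy field).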

Inside this folder, a configuration file named "config.json" includes all the transformations between sensors as well as intrinsic parameters for the camera.
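
The exact schema of config.json is not reproduced here, so the sketch below does not parse the file. It assumes that each sensor offset can be expressed as a 6-DOF pose [x, y, z, roll, pitch, yaw] in metres and radians with a Z-Y-X (yaw, then pitch, then roll) rotation order; this convention is common in robotics but must be checked against the file before use. It shows how such a pose becomes a 4x4 homogeneous transform and how transforms chain (for example, velodyne to vehicle to camera). The helper names `pose_to_matrix`, `compose` and `transform_point` are hypothetical.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def pose_to_matrix(pose: Sequence[float]) -> Matrix:
    """4x4 homogeneous transform from [x, y, z, roll, pitch, yaw].

    Assumes metres and radians and R = Rz(yaw) @ Ry(pitch) @ Rx(roll).
    This convention is an assumption; verify it against config.json.
    """
    x, y, z, roll, pitch, yaw = pose
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr, x],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr, y],
        [-sp, cp * sr, cp * cr, z],
        [0.0, 0.0, 0.0, 1.0],
    ]


def compose(a: Matrix, b: Matrix) -> Matrix:
    """Matrix product a @ b: applying the result equals applying b, then a."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def transform_point(t: Matrix, p: Sequence[float]) -> List[float]:
    """Apply a 4x4 transform to a 3D point (implicit homogeneous w = 1)."""
    return [t[i][0] * p[0] + t[i][1] * p[1] + t[i][2] * p[2] + t[i][3] for i in range(3)]


# Example: a sensor mounted 1 m above the reference frame, yawed by 90 degrees.
T = pose_to_matrix([0.0, 0.0, 1.0, 0.0, 0.0, math.pi / 2])
print(transform_point(T, [1.0, 0.0, 0.0]))  # approximately [0.0, 1.0, 1.0]
```

Keeping every offset as a 4x4 matrix means a chain of sensor offsets reduces to repeated calls to `compose`, and the inverse direction can be obtained by inverting the matrix.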

Each date folder also contains an annotations folder, holding all annotated (ladybug) images and (velodyne) point clouds, and a data folder, holding the raw (ladybug) images, (velodyne) point clouds, and (novatel) localization data.
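
The folder layout described above can be traversed with the Python standard library alone. This is a minimal sketch that relies only on the folder names shown in the tree; the file extensions `*.png`, `*.pcd` and `*.bin` inside the annotation folders are assumptions, and the helpers `list_sessions` and `annotated_files` are hypothetical.

```python
from pathlib import Path
from typing import Dict, List


def list_sessions(root: Path) -> Dict[str, List[Path]]:
    """Map each environment folder (e.g. 'almonds') to its date-named session folders.

    A session folder is recognised by containing an 'annotations' or 'data'
    subfolder, which skips top-level entries such as 'config' and 'readme.txt'.
    """
    sessions: Dict[str, List[Path]] = {}
    for env in sorted(p for p in root.iterdir() if p.is_dir()):
        dates = sorted(
            d for d in env.iterdir()
            if d.is_dir() and ((d / "annotations").is_dir() or (d / "data").is_dir())
        )
        if dates:
            sessions[env.name] = dates
    return sessions


def annotated_files(session: Path) -> Dict[str, List[Path]]:
    """List one session's annotation files (extensions are assumed)."""
    ann = session / "annotations"
    return {
        "images": sorted((ann / "ladybug").glob("*.png")),
        "pcd": sorted((ann / "velodyne" / "pcd").glob("*.pcd")),
        "bin": sorted((ann / "velodyne" / "bin").glob("*.bin")),
    }


# Usage: only runs if the dataset has been extracted into the working directory.
root = Path("acfr-obstacle-dataset")
if root.is_dir():
    for env, dates in list_sessions(root).items():
        for d in dates:
            files = annotated_files(d)
            print(f"{env}/{d.name}: {len(files['images'])} images, {len(files['pcd'])} pcd files")
```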

The annotated images are available as labeled png files. For converting between RGB colors and class labels, see image_labels.csv inside the config folder.
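
The column layout of image_labels.csv is not reproduced here, so the following sketch assumes one row per class in the form `name,r,g,b` with an optional header row; adapt the parsing to the actual file. The class names in the example are placeholders, not the dataset's label table, and `load_color_map` and `pixels_to_labels` are hypothetical helpers.

```python
import csv
import io
from typing import Dict, Iterable, List, Optional, Tuple

RGB = Tuple[int, int, int]


def load_color_map(lines: Iterable[str]) -> Dict[RGB, str]:
    """Build an {(r, g, b): class_name} lookup from CSV rows.

    Assumes each row is 'name,r,g,b'; rows whose colour fields are not
    integers (such as a header) are skipped. The layout is an assumption.
    """
    color_map: Dict[RGB, str] = {}
    for row in csv.reader(lines):
        if len(row) < 4:
            continue
        name, *rgb = (field.strip() for field in row[:4])
        if not all(v.isdigit() for v in rgb):
            continue  # header or malformed row
        color_map[(int(rgb[0]), int(rgb[1]), int(rgb[2]))] = name
    return color_map


def pixels_to_labels(pixels: Iterable[RGB], color_map: Dict[RGB, str]) -> List[Optional[str]]:
    """Translate RGB pixels to class names; unknown colours map to None."""
    return [color_map.get(tuple(p)) for p in pixels]


# Example with placeholder classes (not the dataset's real label table).
sample = io.StringIO("name,r,g,b\nground,0,128,0\nhuman,255,0,0\n")
cmap = load_color_map(sample)
print(pixels_to_labels([(255, 0, 0), (1, 2, 3)], cmap))  # ['human', None]
```

Pixels from an annotated png can be obtained with an image library such as Pillow, e.g. `list(Image.open(path).convert("RGB").getdata())`, and passed to `pixels_to_labels` unchanged.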

The annotated point clouds are available both in pcd format (for the Point Cloud Library) and in a binary format (for the comma/snark libraries). For converting between RGB colors and class labels, see pointcloud_labels.csv inside the config folder.
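
PCD is the Point Cloud Library's documented file format: a short text header (`FIELDS`, `SIZE`, `TYPE`, `COUNT`, `WIDTH`, `HEIGHT`, `VIEWPOINT`, `POINTS`, `DATA`) followed by the points. For quick inspection without PCL, a minimal sketch for ASCII-encoded files follows. Whether the dataset's pcd files are ASCII or binary, and whether the colour sits in a field named `rgb`, are assumptions to check against a file header; binary files are better read with PCL itself (e.g. `pcl::io::loadPCDFile`). `read_ascii_pcd` and `unpack_rgb` are hypothetical helpers.

```python
import struct
from typing import Dict, List, Tuple


def read_ascii_pcd(text: str) -> Tuple[List[str], List[Dict[str, str]]]:
    """Parse an ASCII PCD file into (field_names, rows).

    Each row maps field name -> raw token so callers decide how to
    interpret each column. Only 'DATA ascii' with one value per field
    is supported in this sketch.
    """
    lines = iter(text.splitlines())
    fields: List[str] = []
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, *values = line.split()
        if key == "FIELDS":
            fields = values
        elif key == "DATA":
            if values != ["ascii"]:
                raise ValueError(f"unsupported PCD data encoding: {values}")
            break
    # The iterator now points at the first data line.
    rows = [dict(zip(fields, line.split())) for line in lines if line.strip()]
    return fields, rows


def unpack_rgb(token: str) -> Tuple[int, int, int]:
    """Decode a PCL-style packed colour (0x00RRGGBB) into (r, g, b).

    Depending on the writer, the value is stored either as an unsigned
    integer or as a float whose bit pattern holds the packed colour.
    """
    try:
        packed = int(token)
    except ValueError:
        packed = struct.unpack("<I", struct.pack("<f", float(token)))[0]
    return (packed >> 16) & 0xFF, (packed >> 8) & 0xFF, packed & 0xFF
```

Decoding each point's colour with `unpack_rgb` and then looking it up in the table from pointcloud_labels.csv yields per-point class labels.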

The raw data are available only as bin files. See the usage section below for how to read these.

All annotated images and point clouds are available as png images and pcd files (Point Cloud Library).

If only the annotations are needed, no further libraries have to be installed.

To access the raw data, we recommend installing the comma and snark libraries on Linux:

The raw images, point clouds, and localization data are only available as binary files.

The binary formats of the raw (ladybug) images, (velodyne) point clouds, and (novatel) localization data are described by the files ladybug.config, velodyne.config, and novatel.config inside each data folder.
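
comma/snark describe binary records with format strings such as `t,3d`: a comma-separated list of type codes, where a leading number repeats a type. If the .config files hold strings of this kind (an assumption; check them and binary_formats.txt), fixed-size records can be decoded with Python's `struct` module even without comma installed. This sketch covers only a subset of comma's type codes as we understand them. It assumes little-endian, unpadded records, and it reads `t` as a raw 64-bit integer that is taken to be microseconds since the Unix epoch (also an assumption). `comma_to_struct` and `read_records` are hypothetical helpers.

```python
import re
import struct
from typing import List, Tuple

# Subset of comma type codes -> struct codes ('<' = little-endian, no padding).
# Reading 't' as an int64 microsecond count is an assumption of this sketch.
_COMMA_TO_STRUCT = {
    "b": "b", "ub": "B", "w": "h", "uw": "H",
    "i": "i", "ui": "I", "l": "q", "ul": "Q",
    "c": "c", "f": "f", "d": "d", "t": "q",
}
_TOKEN = re.compile(r"^(\d*)(s\[(\d+)\]|[a-z]+)$")


def comma_to_struct(fmt: str) -> str:
    """Translate a comma binary format such as 't,3d,ui' into a struct format."""
    out = ["<"]
    for token in fmt.replace(" ", "").split(","):
        m = _TOKEN.match(token)
        if not m:
            raise ValueError(f"cannot parse format token {token!r}")
        count = int(m.group(1) or 1)
        if m.group(3):  # fixed-length string s[n]
            out.append(f"{m.group(3)}s" * count)
        elif m.group(2) in _COMMA_TO_STRUCT:
            out.append(_COMMA_TO_STRUCT[m.group(2)] * count)
        else:
            raise ValueError(f"type {m.group(2)!r} is not covered by this sketch")
    return "".join(out)


def read_records(data: bytes, fmt: str) -> List[Tuple]:
    """Decode a buffer of fixed-size records into one tuple per record."""
    record = struct.Struct(comma_to_struct(fmt))
    if len(data) % record.size:
        raise ValueError("data length is not a multiple of the record size")
    return list(record.iter_unpack(data))


# Example with a synthetic record (illustrative values only).
payload = struct.pack("<qddd", 1_370_000_000_000_000, 1.0, 2.0, 3.0)
print(comma_to_struct("t,3d"))  # <qddd
print(read_records(payload, "t,3d"))  # [(1370000000000000, 1.0, 2.0, 3.0)]
```

If the microsecond assumption holds, `datetime.fromtimestamp(t / 1e6, tz=timezone.utc)` turns a decoded timestamp into a UTC datetime. The sketch handles only fixed-size records such as point or navigation streams; image streams, whose records carry a large pixel payload, likely need extra handling.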

A table listing all binary formats is available in binary_formats.txt inside the config folder.

Examples of how to load and visualize these with the comma/snark libraries (which must be installed):

All images and point clouds were annotated by hand. Images were annotated pixelwise, whereas point clouds were annotated pointwise.

The following class labels were used:

For converting between RGB colors and class labels for both images and point clouds, see image_labels.csv and pointcloud_labels.csv inside the config folder.

When using this dataset in your research, please cite the following paper.

    @article{kragh2017multi,
      title={Multi-Modal Obstacle Detection in Unstructured Environments with Conditional Random Fields},
      author={Kragh, Mikkel and Underwood, James},
      journal={arXiv preprint arXiv:1706.02908},
      year={2017}
    }

For any questions, feel free to contact: